Chris Lengerich

How do we make scientists and engineers 100x more productive to solve problems that matter?

Deep learning TL and investor working towards open-sourcing finance, science and psychology as a multi-year plan to answer that question:

- Psych -> contrastive value theory (mostly done, 2017-2023)

- Contrastive value theory -> personal, self-learning AI (2023-current)

- Personal AI x alignment via differentiable credit -> collaborative games that lead to flow

- Flow, in generality -> systematic breakthroughs

- Systematic breakthroughs -> understanding of disease mechanisms

- Understanding of disease mechanisms -> health, in generality

Investment

The world is full of places where thoughtful time or money can deliver 10x returns on happiness and efficiency. Many of the best entrepreneurs and CEOs also tend to think like investors (Stripe, etc.). I don't like labels, but if you do, investment-oriented thinking typically means being empirical above all, which leads to being contrastivist, which leads to both positive and negative marginal analysis (the basis of contrastive value theory).

Started a private ~103-person wealth management group called First Cap which has delivered strong returns for members. Investments historically have returned 30%-170% in 1-2 months with a max drawdown of 7%, and the group has been running for over three years through several macro periods. We're a bunch of nice graduates of top PhD programs (Stanford, MIT, etc.), successful entrepreneurs and financial experts building scalable, diversified investment strategies even our parents can invest in. Personal growth investments include SpaceX and Impossible Foods.

For angel investments, I'm a Venture Partner at Pioneer Fund and occasionally advise VCs like Zhenfund. I defer most angel investing to Pioneer and YC but will support friends and have made first-round or early investments in Orca.so (tokens), inito.com, Samos Insurance, Alternativ, Storytell.ai, Flyx.ai, Lutra.ai, Second.dev, Engage Bio, Switch Bioworks, VitaDAO (tokens) and Vcreate.

Investing your time as an entrepreneur can be much more scientific and efficient than it is right now. I wrote a collection of articles to put first principles and empirical grounding behind growing yourself through early-stage, which will hopefully increase the hit rate of the ecosystem and be helpful to others starting out (see Essays).

ML Research & Product

Despite the massive volume of papers today, there are still many underinvested areas as ML scales out. Especially enjoy those where we're just figuring out the machine learning to unlock a novel product spec (PhDs, meet PMs in a world of uncertainty^2), and working across the stack (frontend, ML, data, backend) with a team to launch and beyond. Some projects:

NLP

Co-founded CodeCompose. Per Zuck, CodeCompose is a "generative AI coding assistant that we built to help our engineers as they write code. Our longer term goal is to build more AI tools to support our teams across the whole software development process, and hopefully to open source more of these tools as well."

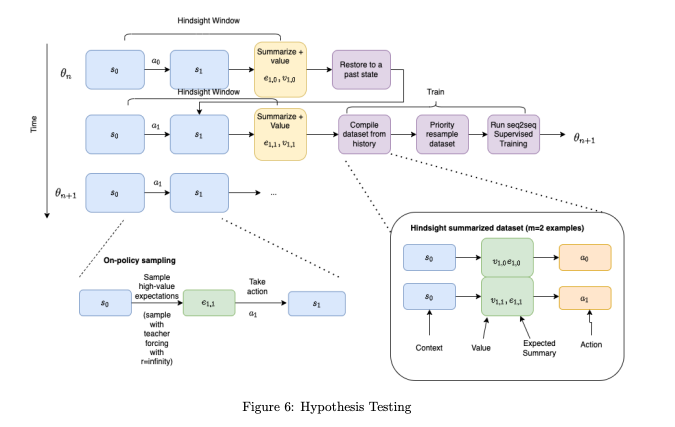

Can contrastive value theory improve our understanding of self-learning in large language models? Initially a weird conjecture, now gaining increasing experimental evidence and showing up in frontier AI and LLMs.

Unlocking human potential via personalized language learning modules for job seekers, powered by AI. 2 years of hard ML x early product and engineering. Launched in Indonesia (2020). Descendants now launched in Argentina, Colombia, India (Hindi), Indonesia, Mexico, and Venezuela (2023).

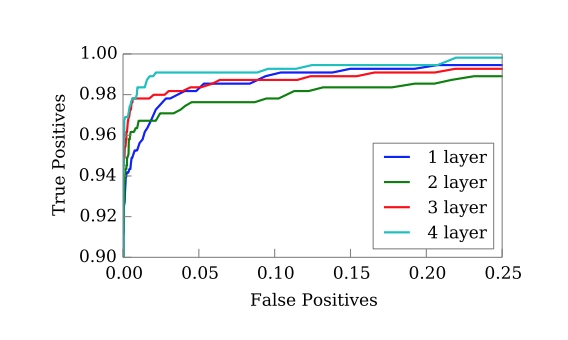

Keyword spotting, voice activity detection and large-vocabulary speech recognition used to be treated as separate tasks. We published a model which does them all in a single architecture and with less retraining, which also happens to 2x VAD accuracy.

In the past, I've also published on speech recognition at Stanford (see Publications).

Healthcare

Supporting women through the menopause transition. Co-founded the company (thanks to Khosla for an incubating EIR opportunity), wrote the first website and mission statement, scaled the team from 2 to 5, talked to a bunch of clients. This ran for several years with an even larger team, and helped attract significant attention to the space that continues to this day.

It used to be that we didn't know much about health between doctor's visits. Shipped the first ML models for continuous pulse tracking for the Baseline watch for 10,000 volunteers to track pulse over all activities, including through noise like running, walking and outdoor conditions (a Google X-backed project).

Investment

Art pricing has small datasets and an exponentially-scaled output space, which makes it challenging for models found in much of the ML literature. Shipped a completely new neural architecture in a month which contributed ~X0% relative increase in accuracy and requires fewer features than prior models. Was adopted by Arthena and descendants were still in use in 2021 (Arthena was acquired by Masterworks in 2023).